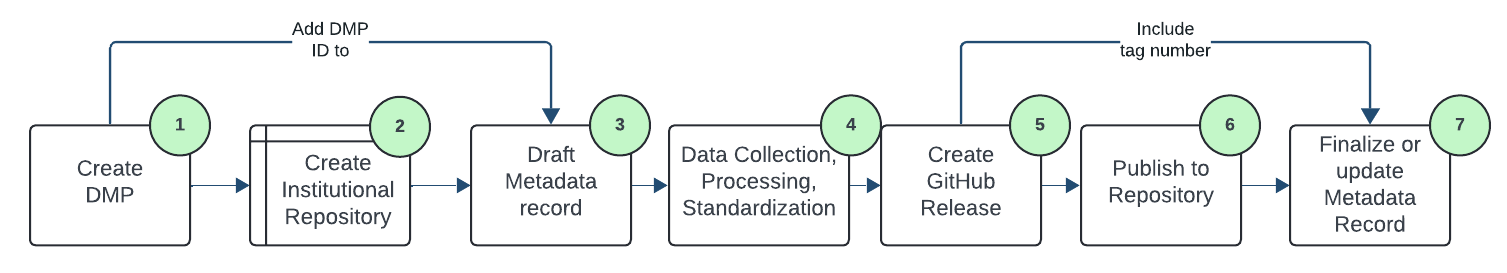

The 7-step Hakai Research Data Workflow

The guidelines below are intended for internal use by Hakai scientists. They provide concrete data management and mobilization steps to apply throughout a research project once its EOI has been approved.

- Create a Data Management Plan (DMP) in Google Docs (see Data Management Plans)

a. Share and revise the DMP as needed

b. Contact the Data Management (DM) Team at data@hakai.org for review

c. The DM Team mints a DOI for the DMP

d. The DM Team adds the DMP DOI to the project in the Tula Portfolio

- Create a GitHub repository in the Hakai organizational account for the research product

a. If GitHub does not meet your interim data storage requirements, consider alternative institutional data holdings (see Data Services). Do not store data in a personal repository or personal drive

b. Use the Hakai-dataset-repository-template

- For each research output, draft a metadata record for the Hakai Catalogue using the Metadata Entry Tool

a. A metadata record can be submitted as a draft before data processing begins

- Collect, process, and standardize the data

a. Store raw and/or processed data in the Hakai Database (e.g., EIMS, NAS) or in the chosen institutional data repository

- Finalize your data package and create a public GitHub Release

a. Change the visibility of your repository to public

b. Create a GitHub Release

c. Copy the tag version number into the Metadata Entry Tool’s version number field

d. Link to the GitHub Release by entering its URL as the Primary Resource in the metadata record

- Publish your raw and/or processed data to the project’s chosen domain-specific or generalist data repository

- Finalize or update the metadata record(s) and submit for review

a. Add the DMP ID as a Related Work

b. Where applicable, add a link to the data in a generalist or domain-specific repository as a Related Work

c. If you have already published a metadata-only record, update it and contact data@hakai.org for review
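The GitHub parts of this workflow (creating the repository from the template, making it public, and creating a release whose URL becomes the Primary Resource) can also be scripted against the GitHub REST API. The sketch below is a hypothetical helper, not an official Hakai tool. It uses only Python's standard library and builds the requests without sending them. The organization name `HakaiInstitute`, the assumption that `Hakai-dataset-repository-template` lives in that organization, and all function names are illustrative assumptions. The repository starts out private because the workflow makes it public only when the data package is finalized.

```python
import json
import urllib.request

API = "https://api.github.com"
# Assumption: illustrative organization and template names; confirm the
# actual Hakai organization account and template location on GitHub.
ORG = "HakaiInstitute"
TEMPLATE_REPO = "Hakai-dataset-repository-template"


def _request(method: str, path: str, token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated GitHub REST API request."""
    return urllib.request.Request(
        API + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method=method,
    )


def new_repo_from_template(name: str, token: str) -> urllib.request.Request:
    """Create a private repository in the organization from the template."""
    return _request(
        "POST",
        f"/repos/{ORG}/{TEMPLATE_REPO}/generate",
        token,
        {"owner": ORG, "name": name, "private": True},
    )


def make_public(name: str, token: str) -> urllib.request.Request:
    """Switch the repository's visibility to public."""
    return _request("PATCH", f"/repos/{ORG}/{name}", token, {"private": False})


def create_release(name: str, tag: str, token: str) -> urllib.request.Request:
    """Create a GitHub Release for the given tag (the tag is created if missing)."""
    return _request(
        "POST",
        f"/repos/{ORG}/{name}/releases",
        token,
        {"tag_name": tag, "name": tag},
    )


def release_url(name: str, tag: str) -> str:
    """Public URL of the release, to enter as the Primary Resource."""
    return f"https://github.com/{ORG}/{name}/releases/tag/{tag}"


if __name__ == "__main__":
    token = "YOUR_TOKEN"  # placeholder; never commit a real token
    for req in (
        new_repo_from_template("example-dataset", token),
        make_public("example-dataset", token),
        create_release("example-dataset", "v1.0.0", token),
    ):
        # Print instead of sending; urllib.request.urlopen(req) would send it.
        print(req.get_method(), req.full_url)
    print(release_url("example-dataset", "v1.0.0"))
```

To perform a call, pass a request to `urllib.request.urlopen` with a token that is allowed to create repositories in the organization. The tag passed to `create_release` is the one whose version number is copied into the Metadata Entry Tool. Keeping request construction separate from sending makes each step easy to review before anything changes on GitHub.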